TOC

Open TOC

info

远程仓库

https://github.com/oceanbase/miniob

2022 OceanBase 数据库大赛

https://open.oceanbase.com/competition/index

官网教程

https://ask.oceanbase.com/t/topic/35601006

第一届比赛预赛题目

https://open.oceanbase.com/train?questionId=200001

往届参赛选手经验

https://zhuanlan.zhihu.com/p/445201899

https://zhuanlan.zhihu.com/p/455956866

头歌

https://www.educoder.net/paths/e26a9zcj

setup

install gcc gdb make cmake flex bisoncmake -B build -DDEBUG=ONcmake --build build -- -j $(nproc)cd build./bin/observer -s miniob.sock -f ../etc/observer.ini./bin/obclient -s miniob.sock部分外部依赖通过 submodule 引入,官方提供了 build.sh 脚本用于初始化环境

log

gtest 测试中无法打印日志

日志在 observer.log.* 中查看

设置级别为 trace

storage

file 由一系列 pages 组成

page 的布局如下

- page_num

- data

frame 中存放 page,通过 BufferPoolManager 管理

BufferPoolManager 的成员如下

BPFrameManager,继承自MemPoolSimple内存池,使用 lru 策略- name / fd ->

DiskBufferPool,其中 fd 代表 file 的文件描述符

BufferPoolManager 全局唯一,用于打开 file 构造 DiskBufferPool

每一个 file 的第一个 page 的开始部分为 BPFileHeader

其余 page 可用于存放 record,其开始部分为 PageHeader,或者用于存放 index

使用 RecordPageHandler 和 RecordFileHandler 管理 record

overview

- network

- libevent

Server::recv- create

SessionEvent - add

- create

- seda (stage event driver architecture)

- 处理顺序在 observer.ini 中定义

- session

push_callback-Server::send- create

SQLStageEvent - handle

- query_cache (empty)

- handle

- parse

push_callback- done_immediateSessionEvent- handle

- done_immediate

SQLStageEvent

- resolve

Stmt::create_stmt预处理 insert / select / delete- handle

- plan_cache (empty)

- handle

- optimize (empty)

- handle

- execute

- set_response

SessionEvent

- set_response

- (default_storage)

SCF_LOAD_DATA

test

官方配有测试脚本,迁移到 https://gitee.com/oceandb-space/miniob-test 中

测试分类如下

DDL

- drop table

- unique index

- multi index -> drop / date

DML

- multi insert

- update

- select

- group by with aggregation

- order by

- expr

- sub query

- correlated

- uncorrelated

- join

- cross

- inner

field

- date

- null -> aggregation / cross join

- text

今年新增的测试用例

- typecast

- like

- update-select

- alias

- function

- clog

- show-index

analysis

create table

create table t1 (a int)

ExecuteStage::do_create_table() Db::create_table() Table::create("./miniob/db/sys/t1.table", "t1", "./miniob/db/sys", 1, ...) TableMeta::init("t1", 1, ...) FieldMeta::init("a", INTS, 4, 4, true) TableMeta::serialize() - json 格式 BufferPoolManager::create_file("./miniob/db/sys/t1.data") - Page -> BPFileHeader Table::init_record_handler("./miniob/db/sys") BufferPoolManager::open_file("./miniob/db/sys/t1.data", ...) - 构造与数据文件相关联的 DiskBufferPool DiskBufferPool::open_file("./miniob/db/sys/t1.data") DiskBufferPool::allocate_frame(0, ...) - 为 header page 分配 frame (hdr_frame) BPFrameManager::alloc(fd, 0) - 尝试分配 LruCache::get() - 根据 fd 和 page_num (BPFrameId) 寻找 frame MemPoolSimple::alloc() - 若找不到则分配 MemPoolSimple::extend() BPFrameManager::begin_purge() - 无法分配则开始驱逐 BufferPoolManager::flush_page() - sync 被驱逐的 dirty frame DiskBufferPool::flush_page() DiskBufferPool::load_page(0, ...) - 将 header page 的内容加载到 frame (hdr_frame) 中 RecordFileHandler::init(DiskBufferPool) RecordFileHandler::init_free_pages() BufferPoolIterator::init(DiskBufferPool, 0)t1.table

{ "fields" : [ { "len" : 4, "name" : "__trx", "offset" : 0, "type" : "ints", "visible" : false }, { "len" : 4, "name" : "a", "offset" : 4, "type" : "ints", "visible" : true } ], "indexes" : null, "table_name" : "t1"}table_meta

- sys_fields

__trx - fields

__trx / a - record_size 8

t1.data (page 0)

┌────────┬─────────────────────────┬─────────────────────────┬────────┬────────┐│00000000│ 00 00 00 00 01 00 00 00 ┊ 01 00 00 00 01 00 00 00 │0000•000┊•000•000││00000010│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││00004000│ ┊ │ ┊ │└────────┴─────────────────────────┴─────────────────────────┴────────┴────────┘- page_num

- BPFileHeader

- page_count

- allocated_pages

- bitmap

insert

insert into t1 values (1)

ResolveStage::handle_event() Stmt::create_stmt() InsertStmt::create() Db::find_table("t1") InsertStmt::InsertStmt()

ExecuteStage::do_insert() Table::insert_record(Value) Table::make_record() Table::insert_record(Record) RecordFileHandler::insert_record() - 得到 RID = page_num + slot_num DiskBufferPool::allocate_page() - 若找不到没有填满的页面,则分配一个新的页面 DiskBufferPool::allocate_frame(1, ...) DiskBufferPool::flush_page - extension file RecordPageHandler::init_empty_page(DiskBufferPool, 1, 8) RecordPageHandler::init(DiskBufferPool, 1) DiskBufferPool::get_this_page(1) init page_header and bitmap set page_header RecordPageHandler::insert_record() Table::insert_entry_of_indexes()t1.data (page 1)

┌────────┬─────────────────────────┬─────────────────────────┬────────┬────────┐│00000000│ 00 00 00 00 02 00 00 00 ┊ 02 00 00 00 03 00 00 00 │0000•000┊•000•000││00000010│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││00004000│ 01 00 00 00 01 00 00 00 ┊ dd 07 00 00 08 00 00 00 │•000•000┊ו00•000││00004010│ 08 00 00 00 10 01 00 00 ┊ 01 00 00 00 00 00 00 00 │•000••00┊•0000000││00004020│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││00004110│ 00 00 00 00 01 00 52 fc ┊ 01 00 00 00 00 00 00 00 │0000•0R×┊•0000000││00004120│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││00008000│ ┊ │ ┊ │└────────┴─────────────────────────┴─────────────────────────┴────────┴────────┘- page_num

- PageHeader

- record_num = 1

- record_capacity = 2013

record_capacity * 8 + record_capacity / 8 + 1 <= 16384 - 4 - sizeof(struct PageHeader)

- record_real_size 对齐前

- record_size 对齐后

- first_record_offset = 272

align8(sizeof(struct PageHeader) + 2013 / 8 + 1)- 相对于 data 区的 offset,不包含 page_num

- bitmap

- records

- sys_fields 似乎是随机的

再插入一条记录 insert into t1 values (2)

┌────────┬─────────────────────────┬─────────────────────────┬────────┬────────┐│00000000│ 00 00 00 00 02 00 00 00 ┊ 02 00 00 00 03 00 00 00 │0000•000┊•000•000││00000010│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││00004000│ 01 00 00 00 02 00 00 00 ┊ dd 07 00 00 08 00 00 00 │•000•000┊ו00•000││00004010│ 08 00 00 00 10 01 00 00 ┊ 03 00 00 00 00 00 00 00 │•000••00┊•0000000││00004020│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││00004110│ 00 00 00 00 01 00 52 fc ┊ 01 00 00 00 e4 c1 52 d4 │0000•0R×┊•000××R×││00004120│ 02 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │•0000000┊00000000││00004130│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││00008000│ ┊ │ ┊ │└────────┴─────────────────────────┴─────────────────────────┴────────┴────────┘select

ResolveStage::handle_event() Stmt::create_stmt() SelectStmt::create() collect tables collect query fields FilterStmt::create()

ExecuteStage::do_select() IndexScanOperator / TableScanOperator + PredicateOperator + ProjectOperator output (open -> next -> close)create index

create index i_a on t1 (a)

假设已有一条记录

ExecuteStage::do_create_index() Table::create_index("i_a", "a") IndexMeta::init("i_a", FieldMeta) BplusTreeIndex::create("./miniob/db/sys/t1-i_a.index", IndexMeta, FieldMeta) Index::init(IndexMeta, FieldMeta) BplusTreeHandler::create("./miniob/db/sys/t1-i_a.index", INTS, 4, -1, -1) BufferPoolManager::create_file("./miniob/db/sys/t1-i_a.index") BufferPoolManager::open_file("./miniob/db/sys/t1-i_a.index", ...) DiskBufferPool::allocate_page() MemPoolItem::init() - 用于构造和释放 key Table::scan_record() RecordFileScanner::open_scan() RecordFileScanner::next() IndexInserter::insert_index() BplusTreeIndex::insert_entry() BplusTreeHandler::insert_entry() modify TableMeta具体分析 BplusTreeHandler::insert_entry()

- user_key = 1

- rid = [page 1, slot 0]

key = user_key + rid

插入的是 (key, rid),对应的大小为 20

t1-i_a.index

┌────────┬─────────────────────────┬─────────────────────────┬────────┬────────┐│00000000│ 00 00 00 00 03 00 00 00 ┊ 03 00 00 00 07 00 00 00 │0000•000┊•000•000││00000010│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││00004000│ 01 00 00 00 02 00 00 00 ┊ ff 03 00 00 32 03 00 00 │•000•000┊ו002•00││00004010│ 04 00 00 00 0c 00 00 00 ┊ 02 00 00 00 00 00 00 00 │•000_000┊•0000000││00004020│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││00008000│ 02 00 00 00 01 00 00 00 ┊ 01 00 00 00 ff ff ff ff │•000•000┊•000××××││00008010│ ff ff ff ff ff ff ff ff ┊ 01 00 00 00 01 00 00 00 │××××××××┊•000•000││00008020│ 00 00 00 00 01 00 00 00 ┊ 00 00 00 00 00 00 00 00 │0000•000┊00000000││00008030│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││0000c000│ ┊ │ ┊ │└────────┴─────────────────────────┴─────────────────────────┴────────┴────────┘(page 1)

- page_num

- IndexFileHeader

- root_page = 2

- internal_max_size = (16384 - 12 [page type + item number + parent page id]) / 16 [attr len + RID + page num] = 1023

- leaf_max_size = (16384 - 20 [page type + item number + parent page id + prev and next brother]) / 20 [attr len + RID + RID] = 818

- attr_length = 4

- key_length = 12

- attr_type = INTS

(page 2)

- page_num

- header

- page type = is leaf

- item number = 1

- parent page id = -1

- prev and next brother = -1

- items

- key

- rid

在插入新的 record 时,会在 Table::insert_record 中调用 Table::insert_entry_of_indexes 更新索引

frontend

flex and bison

flex --header-file=lex.yy.h lex_sql.lbison -d -b yacc_sql yacc_sql.ychar

create table t1 (a char);create table t2 (a char(5));默认长度为 4

插入时,超过长度的部分会被截断,以下图 t2.data 为例,字段长度对齐为 16

插入 '111aa' / '222bb' / '111aabb' / '222' / '' 的结果如下

┌────────┬─────────────────────────┬─────────────────────────┬────────┬────────┐│00000000│ 00 00 00 00 02 00 00 00 ┊ 02 00 00 00 03 00 00 00 │0000•000┊•000•000││00000010│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││00004000│ 01 00 00 00 05 00 00 00 ┊ f6 03 00 00 09 00 00 00 │•000•000┊ו00_000││00004010│ 10 00 00 00 98 00 00 00 ┊ 1f 00 00 00 00 00 00 00 │•000×000┊•0000000││00004020│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││00004090│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 d5 4f 92 da │00000000┊0000×O××││000040a0│ 31 31 31 61 61 00 00 00 ┊ 00 00 00 00 03 c0 91 fa │111aa000┊0000•×××││000040b0│ 32 32 32 62 62 00 00 00 ┊ 00 00 00 00 01 40 92 fa │222bb000┊0000•@××││000040c0│ 32 32 32 62 62 00 00 00 ┊ 00 00 00 00 04 00 92 fa │222bb000┊0000•0××││000040d0│ 32 32 32 00 00 00 00 00 ┊ 00 00 00 00 04 c0 91 fa │22200000┊0000•×××││000040e0│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││00008000│ ┊ │ ┊ │└────────┴─────────────────────────┴─────────────────────────┴────────┴────────┘具体逻辑在 Table::make_record 中

miscellaneous

代码是写给人看的

-

clang-format

-

TODO

TODO(chi): cast integer null to other types- commit message

http://www.ruanyifeng.com/blog/2016/01/commit_message_change_log.html

https://github.com/commitizen-tools/commitizen

tech

output parameters

返回值类型统一为 RC,为了返回更多的信息,使用 output parameters 技术

Exception vs. RC

golang

defer

启发于 golang

#pragma once

#include <functional>

namespace common {

class DeferHelper {public: DeferHelper(const std::function<void()> defer) : defer_(std::move(defer)) {}

~DeferHelper() { if (defer_) { defer_(); } }

private: const std::function<void()> defer_;};

} // namespace common

#define DERFER_NAME(suffix) defer_helper_##suffix#define DEFER(callback) common::DeferHelper DERFER_NAME(__LINE__)(callback)adapter pattern

RecordReaderScanAdapter

接口适配

sql design

对于 DDL 而言,bind 之后可以直接执行

主要考虑 DML

nanodb

- 基本流程

ANTLR parser -> NanoSQLTranslator -> prepare plan -> planner -> executor

语法规则似乎不必手写

- 数据结构的演化

StringParseTreeCommandPlanNodebustub

调试,重定向输入

- 基本流程

postgres parser -> binder (transformer) -> planner -> optimizer -> executor

- 数据结构的演化

std::stringduckdb_libpgquery::PGList *BoundStatement BoundTableRef BoundExpressionAbstractPlanNodeRef = std::shared_ptr<const AbstractPlanNode>TableReferenceType

enum class TableReferenceType : uint8_t { INVALID = 0, /**< Invalid table reference type. */ BASE_TABLE = 1, /**< Base table reference. */ JOIN = 3, /**< Output of join. */ CROSS_PRODUCT = 4, /**< Output of cartesian product. */ EXPRESSION_LIST = 5, /**< Values clause. */ SUBQUERY = 6, /**< Subquery. */ EMPTY = 8 /**< Placeholder for empty FROM. */};ExpressionType

enum class ExpressionType : uint8_t { INVALID = 0, /**< Invalid expression type. */ CONSTANT = 1, /**< Constant expression type. */ COLUMN_REF = 3, /**< A column in a table. */ TYPE_CAST = 4, /**< Type cast expression type. */ FUNCTION = 5, /**< Function expression type. */ AGG_CALL = 6, /**< Aggregation function expression type. */ STAR = 7, /**< Star expression type, will be rewritten by binder and won't appear in plan. */ UNARY_OP = 8, /**< Unary expression type. */ BINARY_OP = 9, /**< Binary expression type. */ ALIAS = 10, /**< Alias expression type. */};miniob

- 数据结构的演化

const char *QueryStmtOperator- group by with aggregation

max / min / count / avg

聚合函数中的参数不会是表达式

思路参考 NanoDB Assignments

- order by

在内存中提前排序

- join

left deep tree

递归解析

字段拼接

- expr

算术表达式的语法规则

- sub query

env 机制

只有 where subquery

in / not in - 向量

cmp - 标量

schema 应匹配

cmake

官方写的 CMakeLists.txt 实在有点无语,稍微修改了一下

if (NOT CMAKE_BUILD_TYPE) set(CMAKE_BUILD_TYPE Debug)endif()

# generate compile_commands.jsonset(CMAKE_EXPORT_COMPILE_COMMANDS ON)

add_compile_options(-fsanitize=address -Wall)add_link_options(-fsanitize=address)使用如下命令构建

rm -rf build && cmake -B build -DCMAKE_EXPORT_COMPILE_COMMANDS=ON -DCMAKE_BUILD_TYPE=ON && cmake --build build -- -j $(nproc)perf

inner join 的最后一个测试用例超时,考虑使用 perf 跑个火焰图

参考了 Use perf and FlameGraph to profile program on Linux | Nan Xiao’s Blog

发现对 predicate 求值的时间太长,于是考虑谓词下推

clog

分 block 存储,第一个 block 为文件头,每个 block 为 512 字节

buffer 为 4096 字节

insert into t1 values (1)

┌────────┬─────────────────────────┬─────────────────────────┬────────┬────────┐│00000000│ 08 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │•0000000┊00000000││00000010│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││00000200│ 00 02 00 00 60 00 08 00 ┊ 00 00 00 00 01 00 00 00 │0•00`0•0┊0000•000││00000210│ 03 00 00 00 50 00 00 00 ┊ 74 31 00 00 00 00 00 00 │•000P000┊t1000000││00000220│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││00000230│ 00 00 00 00 00 00 00 00 ┊ 01 00 00 00 00 00 00 00 │00000000┊•0000000││00000240│ 0c 00 00 00 00 00 00 00 ┊ 00 00 00 00 01 00 00 00 │_0000000┊0000•000││00000250│ 01 00 00 00 00 00 00 00 ┊ 50 00 00 00 01 00 00 00 │•0000000┊P000•000││00000260│ 02 00 00 00 10 00 00 00 ┊ 00 00 00 00 00 00 00 00 │•000•000┊00000000││00000270│ 00 00 00 00 00 00 00 00 ┊ 00 00 00 00 00 00 00 00 │00000000┊00000000││* │ ┊ │ ┊ ││00000400│ ┊ │ ┊ │└────────┴─────────────────────────┴─────────────────────────┴────────┴────────┘insert

- header

- lsn - 0

- trx id - 1

- type - REDO_INSERT

- log record len - 80

- table name - t1

- rid - [page 1, slot 0]

- data len - 12

- data

- padding - align to 8

commit

- header

- lsn - 80

- trx id - 1

- type - REDO_MTR_COMMIT

- log record len - 16

commit 后,clog 会落盘,此时数据尚未落盘

restart server 后,server 会根据 clog 恢复出 CLogRecord,然后进行如下处理

void CLogMTRManager::log_record_manage(CLogRecord *log_rec){ if (log_rec->get_log_type() == REDO_MTR_COMMIT) { trx_commited[log_rec->get_trx_id()] = true; delete log_rec; } else if (log_rec->get_log_type() == REDO_MTR_BEGIN) { trx_commited.insert({log_rec->get_trx_id(), false}); delete log_rec; } else { log_redo_list.push_back(log_rec); }}这里说明在非 trx multi operation mode 下,不必手动添加 REDO_MTR_BEGIN 类型的 CLogRecord

之后遍历 log_redo_list,进行如下处理

switch(clog_record->get_log_type()) { case CLogType::REDO_INSERT: { char *record_data = new char[clog_record->log_record_.ins.data_len_]; memcpy(record_data, clog_record->log_record_.ins.data_, clog_record->log_record_.ins.data_len_); Record record; record.set_data(record_data); record.set_rid(clog_record->log_record_.ins.rid_);

rc = table->recover_insert_record(&record); delete[] record_data; } break; case CLogType::REDO_DELETE: { Record record; record.set_rid(clog_record->log_record_.del.rid_); rc = table->recover_delete_record(&record); } break; default: { rc = RC::SUCCESS; }}一个简单的测试用例

client1

create table t(id int);begin;insert into t values(1);insert into t values(2);client2

begin;insert into t values(3);update t set id = 4 where id = 2;client1

select * from t;commit;restart server

client1

select * from t;CREATE TABLE aggregation_func(id int, num int, price float, addr char, birthday date);INSERT INTO aggregation_func VALUES (1, 18, 10.0, 'abc', '2020-01-01');INSERT INTO aggregation_func VALUES (2, 15, 20.0, 'abc', '2010-01-11');INSERT INTO aggregation_func VALUES (3, 12, 30.0, 'def', '2021-01-21');INSERT INTO aggregation_func VALUES (4, 15, 30.0, 'dei', '2021-01-31');INSERT INTO aggregation_func VALUES (5, 18, 10.0, '1abc', '2022-01-01');restart server

client1

select * from t;refactor

sql parser

前端解析考虑使用开源的 parser,这里使用了 hsql

需要单独处理 insert value 中出现负数字面量

同时,考虑到复杂表达式在语法分析过程中,其原始形式的信息会丢失,所以考虑手写一个简单的 parser 解析 select values 及其 aliases

binder

经过 hsql 解析后的 sql 命令已经有了较好的形式,但是为了后端处理的方便,需要通过 binder 进行进一步转换

例如对于 select 语句,hsql 会解析为如下的结构体

struct SelectStatement : SQLStatement { SelectStatement(); ~SelectStatement() override;

TableRef *fromTable; bool selectDistinct; std::vector<Expr *> *selectList; Expr *whereClause; GroupByDescription *groupBy;

std::vector<SetOperation *> *setOperations;

std::vector<OrderDescription *> *order; std::vector<WithDescription *> *withDescriptions; LimitDescription *limit; std::vector<LockingClause *> *lockings;};需要根据 TableRef 得到 from schema,建立 enclosing schema,从而在子查询中能够对 column expr 求值

需要将所有的 Expr 转换为 AbstractExpression,其中提供了 eval / traverse 等方法

planner

这一部分和 nanodb 类似,不多赘述

PlanNode plan = null;

if (FROM-clause is present) plan = generate_FROM_clause_plan();

if (WHERE-clause is present) plan = add_WHERE_clause_to(plan);

if (GROUP-BY-clause and/or HAVING-clause is present) plan = handle_grouping_and_aggregation(plan);

if (ORDER-BY-clause is present) plan = add_ORDER_BY_clause_to(plan);

// There's always a SELECT clause of some sort!plan = add_SELECT_clause_to(plan);由于中间表示的不同,需要单独为 update 和 delete 语句写一个简单的 planner

executor

执行阶段会根据 sql 语句是 DDL 还是 DML 进行相应的处理

对于 DML 语句,会构造相应的 binder 和 planner,最后对 plan node 调用下述接口

virtual RC prepare() = 0;virtual RC initialize() = 0;virtual RC next() = 0;virtual RC current_tuple(TupleRef &tuple) = 0;schema and tuple

这一部分位于 sql 层的 table 包中

Schema 就是 Column 的列表,Column 中包含的信息与框架代码中的 FieldMeta 类似,由于 FieldMeta 会与 json 文件进行序列化和反序列化,所以添加了 Column 这个类

Tuple 类和框架代码中的 Record 类似,为了获取 tuple 中的 value 信息,需要提供 schema,以调用 type system 中的 deserialize_from 方法

type system

参考了 bustub 的设计

Type 类提供了类型的单例,Type 类内部抽象了 value 和 values 之间会进行的操作

对于一个 Value 类的实例,只需根据自身获取类型的单例,然后调用 Type 类的抽象方法即可

这其中便涉及 implicit typecast 和 null comparison 等问题

对于 null comparison,miniob 规定 null 与任何数据对比都是 false

一般而言,null 与任何数据对比都是 unknown

于是在 in expr 中,若 lhs 或 rhs 中存在 null value

select * from t where null in (null);select * from t where null not in (null);则求值的结果应为 false,然而注意到这里有个 not 取反,所以这里需要引入 unknown 三值逻辑

简单起见,若 bool value 的 is null 字段为 true,则认为是 unknown

在布尔值计算的根节点,判断若为 unknown 则计算为 false

对于三值逻辑,考虑有一个元组

(1)的表t思考,unknown 的比较运算

function

在目前的题目中,function 可以分为 simple function 和 aggregate function,这两者均属于 scalar function,即返回值为标量

ScalarFunction 类提供 get_return_type 获取返回值类型

function 在 binder 中会转换为 FunctionCall,其中包含了对应的 AbstractFunction

对于 aggregate function,在 group aggregate node 中会将 FunctionCall 转换为 ColumnValueExpression,所以在其 FunctionCall 的 evaluate 方法中,只需根据 schema 获取对应的 column value 即可

对于 simple function,在其 FunctionCall 的 evaluate 方法中,应调用 SimpleFunction 提供的 evaluate 方法,其对应 expr 的类型不会进行转换

值得注意的一个 function 是 round,会对浮点数四舍五入到指定的精度,然而,前端解析和存储浮点数时可能存在误差,例如

select round(2.5);,其中的 2.5 会被存储为 2.4999,此时后端就会四舍五入为 2,与实际不符,所以一种面向用例的解决方案是在后端多次 round,即round(2.4999*)实际的执行流为round(round(2.4999*, 1), 0)

visitor pattern

主要体现在 AbstractExpression 中 traverse 方法,该方法入参为 ExpressionProcessor,其中定义了 enter 和 leave 接口,enter 和 leave 接口的入参为 AbstractExpression

这样不同类型的 expr 只需实现 enter 和 leave 接口,就可以传入不同的 ExpressionProcessor,对 expr 进行处理

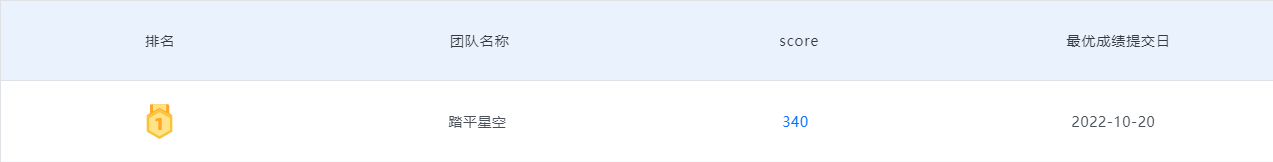

ranking